Self-Healing Data Pipelines: What It Means for Data-Driven Leaders?

For years, on-call systems were treated as a badge of operational maturity in data engineering. Alerts, rotations, and escalation paths were signs that a team was “serious” about reliability. In 2026, that belief no longer holds.

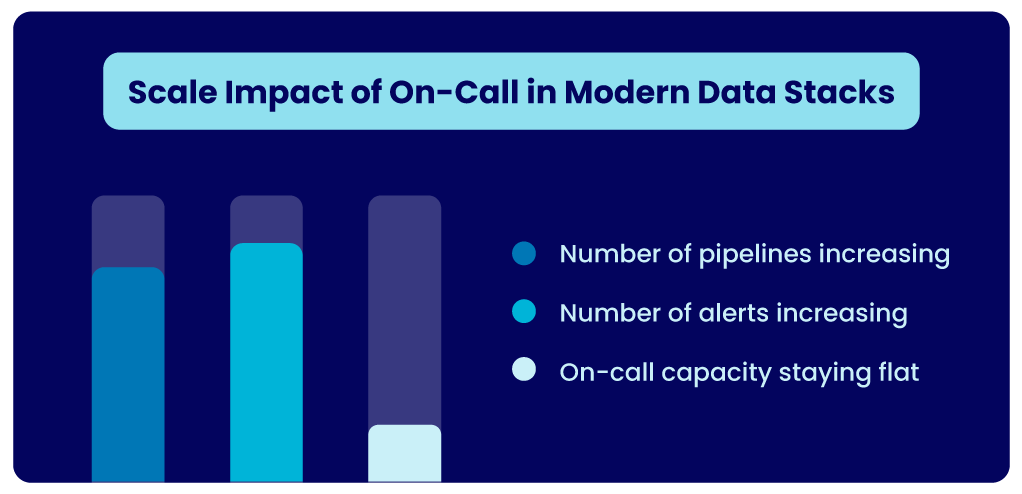

Data from 2025 made something painfully clear: traditional on-call models are burning engineering hours, amplifying business risk, and failing to keep pace with modern data stacks. Pipelines are more distributed, dependencies are more complex, and failure modes are more subtle than ever before.

Meanwhile, organizations are expected to make faster decisions on increasingly automated, AI-driven insights. When data reliability breaks, the cost isn’t just technical; it’s strategic.

That’s why forward-looking teams are moving beyond on-call systems and adopting self-healing data pipelines: systems that not only detect failures but also diagnose, repair, and prevent them automatically. And in 2026, this shift is critical.

The Hidden Cost of On-Call Data Ops

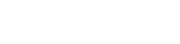

At their core, on-call systems are reactive.

What On-Call Systems Really Do

They:

- Trigger alerts after failures occur

- Provide limited context about root causes

- Rely on human engineers to investigate, fix, and validate issues

Even the best alerting setups still assume one thing: a human must intervene. Engineers get paged for schema changes they didn’t anticipate, upstream delays they don’t own, or data anomalies that require deep context to understand.

Over time, alert fatigue sets in, and truly critical issues are harder to distinguish from noise. On-call doesn’t prevent failures; it just responds to them.

The Human Cost

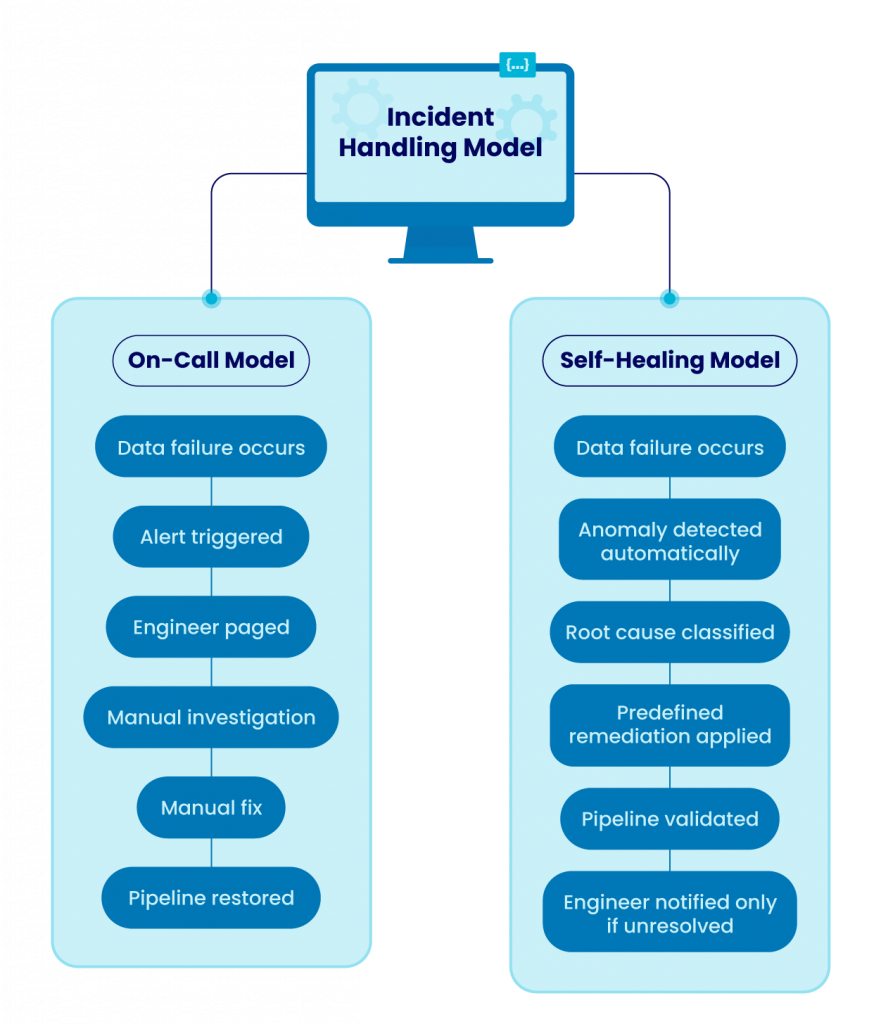

Across the industry, data engineering teams spend a significant portion of their time debugging and manually fixing pipelines.

This work is:

- Interrupt-driven

- Hard to automate with scripts alone

- Often repetitive and low-leverage

Instead of building new capabilities, engineers are pulled into reactive loops, triaging alerts, rerunning jobs, validating downstream dashboards, and documenting incidents that will likely happen again.

Burnout isn’t a side effect of on-call data ops. It’s a predictable outcome.

The Business Cost

When pipelines break, the impact ripples far beyond engineering.

Broken or delayed data leads to:

- Decisions made on stale or incorrect information

- Missed revenue opportunities

- Delayed product launches and experiments

- Loss of trust in analytics and reporting

At scale, on-call becomes one of the most expensive hidden taxes in the data stack.

What Are Self-Healing Data Pipelines?

Self-healing data pipelines represent the next evolution in DataOps and Data Reliability Engineering.

Definition

Self-healing pipelines automatically detect, diagnose, and correct data issues, often without human intervention. Instead of waiting for an alert and paging an engineer, these systems are designed to resolve common failure modes on their own.

How Self-Healing Pipelines Work

A modern self-healing pipeline typically includes:

- Real-time observability and anomaly detection

Monitoring freshness, volume, schema changes, and distribution shifts continuously. - Automated remediation

Triggering retries, rolling back changes, adjusting transformations, or applying predefined corrective actions. - Selective human escalation

Engineers are notified only when automated remediation fails or when strategic judgment is required.

The goal isn’t to remove humans from the loop entirely; it’s to remove them from routine, repeatable failures.

The AI & DataOps Backbone

What makes self-healing pipelines viable at scale is intelligence.

Machine learning models are increasingly:

- Identify early signals of pipeline degradation

- Forecast likely failure patterns

- Recommend or apply fixes before the downstream impact occurs

In 2025, a clear trend emerged: data platforms began combining observability with automated remediation, moving from reactive alerting to predictive reliability.

This shift mirrors what happened in infrastructure and SRE years earlier, only now, it’s happening in data.

Why Self-Healing Pipelines Are Replacing On-Call Systems in 2026

Self-healing pipelines don’t just reduce incidents; they reduce exposure.

Better Reliability by Design

Failures are detected earlier and resolved faster (often invisibly), downstream consumers see fewer outages, fewer broken dashboards, and more consistent data availability. Observability tools are no longer just telling teams something broke. They’re enabling systems to adapt automatically.

Faster Time to Value for Engineering Teams

When engineers aren’t constantly interrupted, something important happens: velocity returns.

Teams can:

- Focus on building new data products

- Improve models and analytics

- Invest in long-term platform quality

Instead of spending nights and weekends firefighting, engineers spend their time designing systems that fail less often in the first place.

Lower Operational and Opportunity Cost

Self-healing pipelines dramatically reduce:

- Manual incident handling

- Mean time to resolution

- Downtime-driven revenue loss

Most importantly, they allow data teams to scale without scaling on-call burden.

Real-World Use Cases

As pipelines multiply, human capacity need not grow linearly. Automation absorbs the complexity.

E-commerce at Peak Scale

During high-traffic events such as Black Friday, schema changes, and unexpected data spikes are common. Self-healing pipelines can detect schema drift, automatically adjust transformations, and validate outputs within minutes without requiring an engineer to intervene.

Financial Data Integrity

In finance, missing or delayed data can have regulatory and reputational consequences. Self-healing systems flag anomalies in completeness or timeliness and apply corrective workflows before dashboards or reports are exposed to stakeholders.

Cloud-Native Analytics Platforms

Modern analytics stacks combine streaming, batch, and real-time workloads. Self-healing pipelines maintain availability by coordinating observability and remediation across layers, ensuring reliability even as architectures evolve.

How Companies Transition from On-Call to Self-Healing

You can’t heal what you can’t see!

Step 1: Establish Baseline Observability

The first step is instrumenting pipelines with clear metrics:

- Freshness

- Volume

- Schema changes

- Downstream impact

This creates a shared language for reliability.

Step 2: Introduce Targeted Automation

Next, teams automate resolution for known failure patterns:

- Retries for transient issues

- Schema evolution handling

- Safe rollbacks and validations

These automations immediately reduce noise and manual effort.

Step 3: Adopt AI-Driven Remediation

Over time, organizations layer in intelligence:

- Predictive alerts

- Root-cause classification

- Automated fixes with human oversight

This is where on-call shifts from operational to strategic.

The Future Outlook: 2026 and Beyond

Self-healing pipelines are becoming foundational for AI-powered enterprises. As more decisions are automated, the tolerance for unreliable data drops to zero. On-call systems will still exist, but only for rare, high-impact scenarios that truly require human judgment.

The system itself will handle routine failures, retries, and validations. That is the future of data reliability.

Your Data Should Work for You

Self-healing data pipelines aren’t about replacing engineers. They’re about freeing them.

Qualdo.ai helps teams move from reactive on-call cycles to proactive, automated data reliability. With built-in observability, AI-assisted remediation, and pipeline intelligence, Qualdo.ai is designed to ensure your data is trustworthy, without constant firefighting.

If your team is ready to retire noisy alerts and build systems that heal themselves, it’s time to rethink on-call.