Why Cloud Data Quality & Consistency Matter More Than Ever

Cloud adoption has gone from “forward-looking” to “essential.” In 2025, more than 94% of enterprises run workloads in the cloud (Flexera, 2025), and most have gone multi-cloud or hybrid. At the same time, SaaS applications, from Salesforce to HubSpot to Workday, have exploded, each producing critical business data, often without any shared quality controls. Add IoT and edge devices to the mix, and suddenly your data is not centralized but scattered across hundreds of systems.

That scale is both exciting and risky. Today, the value of data lies not just in its accuracy or freshness but also in whether it is consistent everywhere it flows. If finance reports one revenue number in Azure, while marketing reports another from Salesforce, the organization loses trust. If an AI model is trained on inconsistent data, predictions suffer. If compliance teams can’t reconcile versions across regions, regulatory exposure rises.

The numbers speak loudly. Gartner estimated that poor data quality costs organizations an average of $12.9 million annually (2023), and that cost is climbing with AI adoption, where even minor inconsistencies can have large ripple effects. In 2025, data quality in the cloud is really about ensuring consistency at scale.

1. Unique Challenges for Data Quality in the Cloud

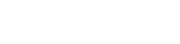

Keeping data consistent in one on-premises warehouse was hard enough. In the cloud, the complexity multiplies. Data doesn’t just move; it fragments. It sits across AWS, Azure, GCP, and SaaS providers, each using its own definitions, compliance frameworks, and service guarantees.

One of the biggest culprits is schema drift. Imagine your HR SaaS adds a new “preferred name” field to employee exports. That tiny change can break downstream analytics and integrations unless it is caught in time. Replication lag can wreak similar havoc: data written to your U.S. region may take minutes to show up in Europe, leading to mismatched reports.

The risks aren’t theoretical. In 2024, a global retailer saw a 15% inventory mismatch between its U.S. and Asia-Pacific warehouses. The cause: inconsistent replication policies across providers. The impact: overselling in one region and unsold inventory piling up in another.

To make matters worse, siloed applications use different standards, APIs evolve without notice, and compliance rules vary by geography. In financial services, a latency gap of even a few seconds can raise audit flags. These aren’t just technical nuisances; they’re business-critical risks.

That’s why executives now frame cloud data quality differently in 2025: the challenge is no longer just bad data, but keeping good data aligned across fragmented systems.

2. The Pillars of Cloud Data Quality and Consistency

So what does “good” look like? Cloud data quality rests on six essential pillars. Think of them as the checks every dataset must pass before it can be trusted:

- Accuracy – Values reflect the truth (e.g., revenue numbers match across systems).

- Completeness – No blank or missing values; critical fields are filled.

- Consistency – The same data looks the same everywhere, no conflicting versions.

- Timeliness/Freshness – Data is up to date, ideally in near real-time.

- Uniqueness – No unintended duplicates that skew analysis.

- Validity – Data conforms to expected rules (dates, email formats, ranges).

When any of these pillars collapse, trust collapses with them. And trust, in 2025, is the ultimate currency in data-driven enterprises.
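Some of the pillars above translate directly into code. As a minimal sketch of the validity pillar, the checks below cover the three rule types mentioned (dates, email formats, ranges). The function names and the deliberately simple email pattern are illustrative assumptions, not a standard; real pipelines usually rely on a dedicated validation library.

```python
import re
from datetime import date

# Deliberately simple pattern (an assumption): something@something.tld
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def is_valid_email(value: str) -> bool:
    """True if the value looks like an email address."""
    return bool(EMAIL_RE.match(value))


def is_valid_iso_date(value: str) -> bool:
    """True if the value parses as an ISO 8601 calendar date."""
    try:
        date.fromisoformat(value)
        return True
    except ValueError:
        return False


def in_range(value: float, low: float, high: float) -> bool:
    """True if low <= value <= high."""
    return low <= value <= high
```

Each function answers one yes/no question, so a failing record can be reported with the exact rule it broke.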

3. Best Practices for Ensuring Data Quality and Consistency in the Cloud

Now to the most important part: how to actually achieve consistency. In practice, leaders are finding that nine best practices define success.

3.1. Establish Clear Cloud Data Quality Standards

Consistency begins with clarity. Every organization should define what “good” looks like in measurable terms. For example, customer data must have 95%+ completeness for phone and email fields, with less than 0.5% duplication across systems. These rules shouldn’t sit in a document no one reads; they should be codified into pipelines and tracked via dashboards.
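One way to codify such a standard is sketched below, using the example thresholds from the paragraph above (95% completeness, under 0.5% duplication). The record layout, the field names `email` and `phone`, and the choice of email as the duplicate key are assumptions made for illustration.

```python
# Thresholds taken from the example in the text; tune them per dataset.
MIN_COMPLETENESS = 0.95     # at least 95% of records have the field filled
MAX_DUPLICATE_RATE = 0.005  # fewer than 0.5% of records are duplicates


def completeness(records: list[dict], field: str) -> float:
    """Share of records whose `field` is present and non-blank."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if str(r.get(field) or "").strip())
    return filled / len(records)


def duplicate_rate(records: list[dict], key_fields: tuple[str, ...]) -> float:
    """Share of records that repeat an already-seen, non-empty key."""
    if not records:
        return 0.0
    seen = set()
    duplicates = 0
    for r in records:
        key = tuple(str(r.get(f) or "").strip().lower() for f in key_fields)
        if not any(key):
            continue  # blank keys are a completeness problem, not a duplicate
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return duplicates / len(records)


def check_standards(records: list[dict]) -> dict[str, bool]:
    """Evaluate each rule and report pass (True) or fail (False)."""
    return {
        "email_completeness": completeness(records, "email") >= MIN_COMPLETENESS,
        "phone_completeness": completeness(records, "phone") >= MIN_COMPLETENESS,
        "duplicate_rate": duplicate_rate(records, ("email",)) < MAX_DUPLICATE_RATE,
    }
```

The pass/fail dictionary returned by `check_standards` is the kind of result a pipeline step could publish to a dashboard after every load.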

3.2. Automate Data Validation and Quality Checks

Manual data reviews don’t scale in the cloud. Instead, validation should happen automatically at ingestion, transformation, and delivery. Tools such as Qualdo™ can flag schema drift, nulls, or duplicates as soon as they appear. One fintech found that introducing automated CDC (change data capture) checks cut reconciliation time by 40% compared to manual processes.
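To make the idea concrete, here is a minimal sketch of a schema drift check that could run at ingestion. It is not how Qualdo or any other product works internally; the expected schema and field names are hypothetical, loosely following the HR export example earlier in the article.

```python
# Hypothetical contract for an HR export: field name -> expected Python type.
EXPECTED_SCHEMA = {"employee_id": int, "full_name": str, "hire_date": str}


def detect_schema_drift(record: dict, expected: dict = EXPECTED_SCHEMA) -> dict:
    """Compare one incoming record against the expected schema.

    Returns the fields that were added, are missing, or carry a value
    of the wrong type. None values are left to a separate null check.
    """
    added = sorted(set(record) - set(expected))
    missing = sorted(set(expected) - set(record))
    wrong_type = sorted(
        name
        for name, expected_type in expected.items()
        if name in record
        and record[name] is not None
        and not isinstance(record[name], expected_type)
    )
    return {"added": added, "missing": missing, "wrong_type": wrong_type}


def has_drift(report: dict) -> bool:
    """True if any category in the drift report is non-empty."""
    return any(report.values())
```

For instance, a record that arrives with a new `preferred_name` field and a string-typed `employee_id` would be reported under `added` and `wrong_type` respectively, so the pipeline can quarantine it instead of failing silently downstream.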

3.3. Implement Cloud-Specific Data Governance

Governance is not bureaucracy; it’s insurance. In the cloud, it means defining ownership, standardizing definitions, and ensuring visibility of lineage. Without it, GDPR or HIPAA compliance becomes guesswork. Governance tooling that integrates with cloud-native warehouses makes it easier to maintain end-to-end visibility.

3.4. Use Distributed and Real-Time Data Synchronization

Global companies can’t afford stale or mismatched data. Databases like Google Spanner, Amazon Aurora Global Database, or CockroachDB replicate data across regions out of the box, though their consistency guarantees differ and should be checked against your requirements. Meanwhile, change data capture and event streaming with Debezium, Kafka, or Kinesis keep downstream systems in near-real-time sync. The key metric: replication lag should stay under 100 milliseconds for mission-critical applications.
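A replication lag budget like this can be monitored with very little code. The sketch below assumes each replicated event carries two timestamps, when it was committed in the primary region and when it was applied in the replica; that event shape is hypothetical, and real systems usually expose lag through their own metrics. It also assumes the two clocks are closely synchronized, which matters at millisecond scale.

```python
from datetime import datetime, timedelta, timezone

# Lag budget from the text: 100 ms for mission-critical applications.
MAX_LAG = timedelta(milliseconds=100)


def replication_lag(committed_at: datetime, applied_at: datetime) -> timedelta:
    """Time between the commit in the primary and the apply in the replica."""
    return applied_at - committed_at


def lagging_events(events, max_lag: timedelta = MAX_LAG) -> list[str]:
    """Return the ids of events whose replication lag exceeds the budget.

    `events` is an iterable of (event_id, committed_at, applied_at) tuples.
    """
    return [
        event_id
        for event_id, committed_at, applied_at in events
        if replication_lag(committed_at, applied_at) > max_lag
    ]


# Usage with two made-up events: one within budget, one over it.
t0 = datetime(2025, 1, 1, tzinfo=timezone.utc)
sample = [
    ("order-1", t0, t0 + timedelta(milliseconds=40)),
    ("order-2", t0, t0 + timedelta(milliseconds=250)),
]
over_budget = lagging_events(sample)
```

Alerting on the list of lagging event ids, rather than on an average, keeps a few slow writes from hiding behind many fast ones.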

3.5. Standardize APIs and Interoperability

Every provider loves their own way of doing things. Your job: impose order. By using API gateways and schema validation, you can enforce consistency. Central version control helps prevent one team’s API change from breaking ten others downstream.
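As a rough illustration of the schema validation step, the sketch below compares two versions of an API response schema and lists the changes that would break existing consumers. The flat `field name -> type name` representation is an assumption made to keep the example short; real contracts are typically expressed in OpenAPI, JSON Schema, Avro, or Protobuf and checked with their own tooling.

```python
def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """List changes between two schema versions that break consumers.

    Removed fields and changed types are treated as breaking.
    Newly added fields are treated as backward compatible.
    """
    problems = []
    for name, old_type in old.items():
        if name not in new:
            problems.append(f"removed field: {name}")
        elif new[name] != old_type:
            problems.append(f"type changed: {name} ({old_type} -> {new[name]})")
    return problems


# Usage with two hypothetical versions of a customer endpoint.
v1 = {"id": "integer", "email": "string"}
v2 = {"id": "string", "preferred_name": "string"}
problems = breaking_changes(v1, v2)
```

Run against every proposed API change under central version control, a check like this turns "one team broke ten others" into a failed review instead of a production incident.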

3.6. Strengthen Security, Privacy, and Compliance Uniformly

It’s not enough to have strong IAM in AWS but weak controls in GCP. Security and compliance policies must be consistent across all clouds. Automating audits with tools like AWS Audit Manager or Microsoft Purview (formerly Azure Purview) reduces manual overhead while supporting regulatory readiness.

3.7. Build for Observability and Proactive Monitoring

You can’t fix what you can’t see. That’s why observability is the heartbeat of cloud data quality, providing anomaly alerts, lineage tracking, and pipeline health dashboards. Some organizations now treat data observability the way DevOps treats uptime: as a non-negotiable part of operations.
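An anomaly alert does not have to start with sophisticated tooling. The sketch below flags a pipeline run whose row count deviates sharply from recent history, using a plain z-score. The threshold of 3 standard deviations and the choice of row count as the monitored metric are assumptions; production observability tools typically use more robust, seasonality-aware models.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a constant history is notable
    return abs(latest - mu) / sigma > threshold


# Usage: daily row counts for a hypothetical orders table.
daily_rows = [1000, 1020, 980, 1010, 990]
sudden_drop = is_anomalous(daily_rows, 400)
normal_day = is_anomalous(daily_rows, 1005)
```

The same function works for null rates, freshness delays, or any other metric that is collected once per run.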

3.8. Appoint Data Stewards with Cloud Expertise

Technology alone won’t solve this. Appoint data stewards for critical domains who understand both data quality and cloud-native nuances. Their job: enforce standards, mediate conflicts, and guide teams on best practices.

3.9. Continuously Audit, Improve, and Evolve

Data ecosystems don’t sit still. Pipelines evolve, APIs change, and new SaaS tools arrive monthly. Continuous auditing, cross-source reconciliation, and even machine learning for predictive anomaly detection are now must-haves. One global bank saw fraud anomalies flagged 3x faster when ML models joined the quality arsenal.
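Cross-source reconciliation, at its simplest, means comparing the same keyed values in two systems. The sketch below assumes each source has already been reduced to a mapping from a business key to a value (for example, invoice id to amount in cents); the keys and system names in the usage example are made up.

```python
def reconcile(source: dict, target: dict) -> dict[str, list]:
    """Compare two keyed datasets and report where they disagree."""
    shared = source.keys() & target.keys()
    return {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "missing_in_source": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }


# Usage: invoice amounts in cents as seen by two hypothetical systems.
finance = {"INV-1": 12000, "INV-2": 5500, "INV-3": 8000}
crm = {"INV-1": 12000, "INV-2": 5000, "INV-4": 300}
report = reconcile(finance, crm)
```

Storing amounts as integer cents avoids false mismatches caused by floating-point rounding. Running the comparison on a schedule, and not only after migrations, is what makes the audit continuous.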

4. Common Pitfalls and How to Avoid Them

The mistakes are as instructive as the best practices:

- Lifting legacy mess into the cloud → Always cleanse before migration.

- Ignoring reconciliation post-migration → Cloud-to-on-prem mismatches can haunt you later.

- Relying solely on manual monitoring → Impossible at scale.

- Neglecting schema/API versioning → Causes silent failures.

- Overlooking compliance differences → Risky in regulated sectors.

Avoiding these pitfalls requires discipline, but the payoff is trust that compounds over time.

5. Future Trends in AI, Automation, and Cloud-Native Data Observability

The future of cloud data quality is less about humans finding problems and more about AI fixing them before anyone notices.

Qualdo™ uses machine learning to detect anomalies, track lineage, and even recommend auto-remediations. In the next few years, agentic AI could autonomously apply new data rules or heal broken pipelines.

Meanwhile, DevOps practices are bleeding into data. Governance-as-code is embedding quality checks directly into CI/CD pipelines, treating consistency as a deploy-time requirement. Observability will also move from add-on to native cloud feature, with built-in quality scores and trust dashboards available out of the box.
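A deploy-time quality gate can be sketched in a few lines. The rule format, metric names, and limits below are hypothetical; the point is only that rules live in version control next to the pipeline code and that a failing rule produces a non-zero exit code, which is what CI/CD systems use to block a deploy.

```python
# Hypothetical rules kept in version control: metric -> (operator, limit).
RULES = {
    "email_completeness": (">=", 0.95),
    "duplicate_rate": ("<", 0.005),
}


def quality_gate(metrics: dict[str, float], rules: dict = RULES) -> list[str]:
    """Return a human-readable failure for every rule that is not met."""
    failures = []
    for name, (operator, limit) in rules.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: metric missing")
            continue
        passed = value >= limit if operator == ">=" else value < limit
        if not passed:
            failures.append(f"{name}: {value} violates {operator} {limit}")
    return failures


def exit_code(failures: list[str]) -> int:
    """0 lets the pipeline continue; 1 blocks the deploy."""
    return 1 if failures else 0
```

In a CI job, a small wrapper script would compute the metrics, print the failures, and call `sys.exit(exit_code(failures))`.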

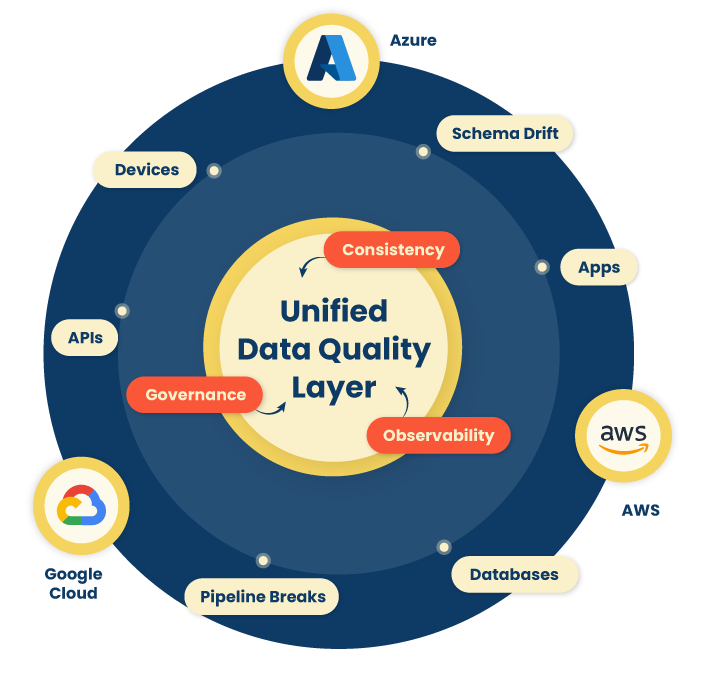

6. Actionable Checklist

A quick “sticky note” version for any enterprise starting today:

- Assess your cloud data landscape and key flows.

- Define and document unified standards.

- Automate validation and monitoring at all stages.

- Enable observability dashboards.

- Reconcile after migrations and continuously.

- Assign accountable stewards.

- Scale with AI-powered products like Qualdo.ai.

In 2025, consistency is the foundation for analytics, AI, and compliance. The organizations that thrive will treat it as a living discipline: automated, AI-driven, and continuously improved. Those that don’t will continue to waste time reconciling dashboards instead of innovating.

Consistency is the new competitive advantage. And in the cloud era, it’s the only way to turn fragmented data into a trusted asset.

Enterprises are increasingly adopting AI-powered products like Qualdo.ai to unify quality, reliability, and observability. The result: trusted pipelines, faster AI, and data-driven innovation without compromise.